Lxml Web Scraping

Pandas makes it easy to scrape a table (<table> tag) on a web page. Once you have it as a DataFrame, you can process it further and save it as an Excel or CSV file.


In this article you’ll learn how to extract a table from any webpage. A page may contain several tables; pandas returns all of them, so you can select the one you need.


Pandas web scraping

Install modules

read_html() parses HTML with lxml by default and can fall back to beautifulsoup4 with html5lib, so install those modules with pip: pip install lxml html5lib beautifulsoup4

pandas.read_html()

The function read_html(url) downloads the page and parses every <table> element on it into a DataFrame, returning them as a list.

The table we’ll get is the version history table from the Wikipedia page on Python:
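A minimal sketch of this step, assuming pandas and lxml are installed. To stay runnable offline it parses a small hypothetical HTML string instead of the live Wikipedia page. Recent pandas versions deprecate passing literal HTML to read_html, so the string is wrapped in io.StringIO; a URL string can be passed directly.

```python
from io import StringIO

import pandas as pd

# Hypothetical stand-in for the downloaded page: it holds a single <table>.
SAMPLE_HTML = """
<table>
  <tr><th>Version</th><th>Release date</th></tr>
  <tr><td>3.11</td><td>2022-10-24</td></tr>
  <tr><td>3.12</td><td>2023-10-02</td></tr>
</table>
"""

# read_html returns a list with one DataFrame per <table> it finds.
# With a real page you would pass the URL string instead of StringIO.
dfs = pd.read_html(StringIO(SAMPLE_HTML))
print(len(dfs))  # number of tables found
```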

read_html() returns a list of DataFrames, one per table, so printing its length shows how many tables were found. Here that is 1, because there is one table on the page. If you change the URL, the output will differ.

To output the table:
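The output step can be sketched like this, again on a hypothetical inline page, this time with two tables so the selection is visible. Indexing the returned list picks a table by position; read_html's match parameter is an alternative that keeps only tables whose text matches a string or regular expression.

```python
from io import StringIO

import pandas as pd

# Hypothetical page with two tables, to show how to pick one.
SAMPLE_HTML = """
<table>
  <tr><th>Team</th><th>Points</th></tr>
  <tr><td>Red</td><td>3</td></tr>
</table>
<table>
  <tr><th>Version</th><th>Release date</th></tr>
  <tr><td>3.11</td><td>2022-10-24</td></tr>
  <tr><td>3.12</td><td>2023-10-02</td></tr>
</table>
"""

dfs = pd.read_html(StringIO(SAMPLE_HTML))
print(dfs[1])  # tables are listed in page order, so index 1 is the second one

# Alternatively, keep only tables whose text matches a string or regex.
versions = pd.read_html(StringIO(SAMPLE_HTML), match="Release date")[0]
print(versions)
```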

You can access columns like this:
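A short sketch of column access on a hypothetical one-table page: the header cells become column names, and indexing the DataFrame with a name returns that column as a Series.

```python
from io import StringIO

import pandas as pd

# Hypothetical single-table page.
SAMPLE_HTML = """
<table>
  <tr><th>Version</th><th>Release date</th></tr>
  <tr><td>3.11</td><td>2022-10-24</td></tr>
  <tr><td>3.12</td><td>2023-10-02</td></tr>
</table>
"""

df = pd.read_html(StringIO(SAMPLE_HTML))[0]
print(df.columns.tolist())           # header cells become column names
print(df["Release date"])            # one column, as a Series
print(df["Release date"].tolist())   # the same column as a plain list
```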


Once the table is in a DataFrame, it’s easy to post-process. If the table has many columns, you can select just the ones you want. See code below:
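A sketch of column selection, assuming a hypothetical table with one column too many. Passing a list of names inside the brackets returns a new DataFrame containing only those columns, in that order.

```python
from io import StringIO

import pandas as pd

# Hypothetical table with more columns than we need.
SAMPLE_HTML = """
<table>
  <tr><th>Version</th><th>Release date</th><th>Notes</th></tr>
  <tr><td>3.11</td><td>2022-10-24</td><td>sample note</td></tr>
  <tr><td>3.12</td><td>2023-10-02</td><td>sample note</td></tr>
</table>
"""

df = pd.read_html(StringIO(SAMPLE_HTML))[0]
# A list of column names inside [] returns a new DataFrame with just those columns.
subset = df[["Version", "Release date"]]
print(subset)
```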

Then you can write it to an Excel file or process it further:
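A sketch of the saving step. The helper save_table is hypothetical: it writes .xlsx paths with DataFrame.to_excel, which needs an Excel engine such as openpyxl installed, and everything else with DataFrame.to_csv. The demo writes CSV to a temporary directory so it runs without extra packages.

```python
import tempfile
from pathlib import Path

import pandas as pd


def save_table(df, path):
    """Write df to .xlsx (needs an Excel engine such as openpyxl) or else to CSV."""
    path = Path(path)
    if path.suffix == ".xlsx":
        df.to_excel(path, index=False)
    else:
        df.to_csv(path, index=False)
    return path


# Hypothetical post-processed table.
df = pd.DataFrame(
    {"Version": ["3.11", "3.12"], "Release date": ["2022-10-24", "2023-10-02"]}
)

out_dir = Path(tempfile.mkdtemp())
csv_path = save_table(df, out_dir / "versions.csv")
roundtrip = pd.read_csv(csv_path, dtype=str)  # read back to check the file
print(roundtrip)
```

Passing a path ending in .xlsx, e.g. save_table(df, "versions.xlsx"), would produce an Excel file instead.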


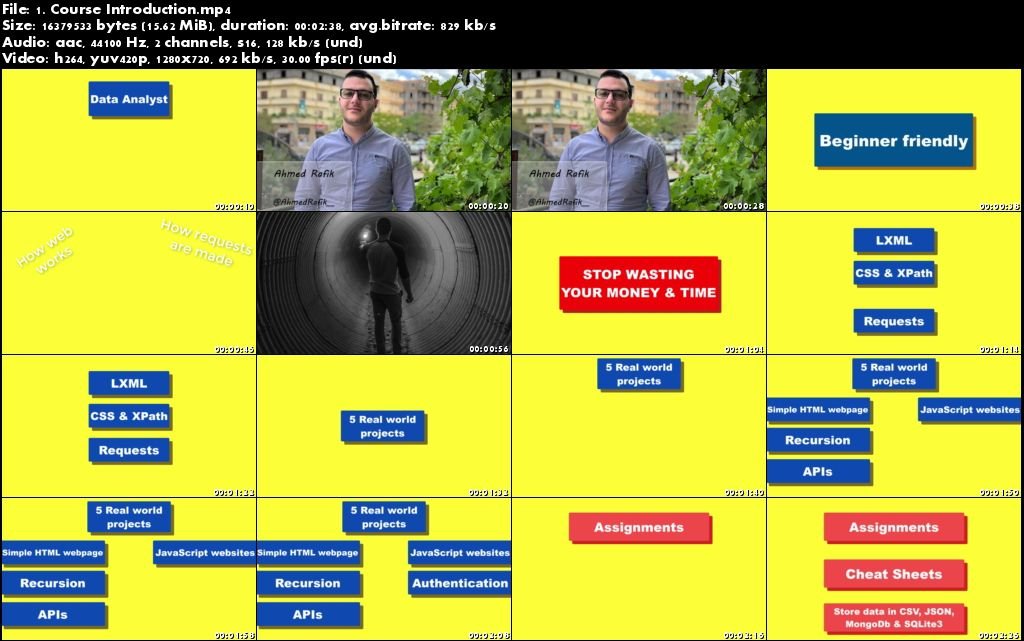

lxml and Requests

lxml is a pretty extensive library written for parsing XML and HTML documents very quickly, even handling messed up tags in the process. We will also be using the Requests module instead of the already built-in urllib2 module (urllib.request in Python 3) due to improvements in speed and readability. You can easily install both using pip install lxml and pip install requests.

Let’s start with the imports:
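The two imports this walkthrough relies on, as a sketch (both packages must be installed first):

```python
# lxml.html parses HTML into an element tree; requests downloads pages over HTTP.
from lxml import html
import requests
```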

Next we will use requests.get to retrieve the web page with our data, parse it using the html module, and save the results in tree:
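A minimal sketch of the fetch-and-parse step. The helper names fetch_tree and parse_page are hypothetical, and splitting parsing into its own function is only so the demo can run offline on a made-up page; with network access you would call fetch_tree with the page URL.

```python
from lxml import html
import requests


def parse_page(content):
    """Parse raw page bytes into an lxml element tree."""
    return html.fromstring(content)


def fetch_tree(url, timeout=10):
    """Download url with requests and return the parsed tree."""
    page = requests.get(url, timeout=timeout)
    page.raise_for_status()  # fail loudly on 4xx/5xx responses
    return parse_page(page.content)  # .content is the raw bytes of the body


# Offline check with a hypothetical page instead of a real download.
tree = parse_page(b"<html><body><p>Hello</p></body></html>")
print(tree.xpath("//p/text()"))
```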

(We use page.content, the raw bytes, rather than page.text, the decoded string, because html.fromstring works best with bytes: it rejects a str that carries an XML encoding declaration.)
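A small sketch of why this matters, assuming a hypothetical page that begins with an XML declaration naming its encoding (some XHTML pages do). lxml raises ValueError for a Python str that carries such a declaration, while the same data as bytes, which is what page.content holds, parses normally. can_parse is a hypothetical helper for the demonstration.

```python
from lxml import html

# Hypothetical page that starts with an XML declaration naming its encoding.
RAW = (
    b'<?xml version="1.0" encoding="utf-8"?>'
    b"<html><body><p>hello</p></body></html>"
)


def can_parse(data):
    """Return True if lxml.html accepts data, False if it raises ValueError."""
    try:
        html.fromstring(data)
    except ValueError:
        return False
    return True


print(can_parse(RAW))                  # bytes, like page.content
print(can_parse(RAW.decode("utf-8")))  # str, like page.text
```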

tree now contains the whole HTML file in a nice tree structure, which we can go over in two different ways: XPath and CSS selectors (via the cssselect package). In this example, we will focus on the former.

XPath is a way of locating information in structured documents such as HTML or XML documents. A good introduction to XPath is on W3Schools.
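A few common XPath forms, sketched against a hypothetical document: text() selects text nodes, @href selects attribute values, [@id="..."] filters on an attribute, and [2] picks a position (XPath counts from 1).

```python
from lxml import html

# Hypothetical document for trying out XPath expressions.
DOC = b"""<html><body>
<ul id="links">
  <li><a href="/a">First</a></li>
  <li><a href="/b">Second</a></li>
</ul>
</body></html>"""

tree = html.fromstring(DOC)
texts = tree.xpath('//ul[@id="links"]/li/a/text()')  # text nodes
hrefs = tree.xpath('//ul[@id="links"]/li/a/@href')   # attribute values
second = tree.xpath('//ul[@id="links"]/li[2]/a')[0]  # 1-based position
print(texts, hrefs, second.text)
```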

There are also various tools for obtaining the XPath of elements, such as Firefox's developer tools (formerly FireBug) or the Chrome Inspector. If you’re using Chrome, you can right click an element, choose ‘Inspect’, highlight the code, right click again, and choose ‘Copy XPath’.
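As a programmatic alternative to a browser's ‘Copy XPath’, lxml can report an absolute path for any element through the getpath() method of its ElementTree. A sketch on a hypothetical document:

```python
from lxml import html

# Hypothetical document with two sibling <div> elements.
DOC = b"<html><body><div>intro</div><div><span>target</span></div></body></html>"

tree = html.fromstring(DOC)
span = tree.xpath("//span")[0]
# getpath() builds an absolute XPath, adding [n] where same-named siblings exist.
path = tree.getroottree().getpath(span)
print(path)
print(tree.xpath(path)[0].text)  # the path leads back to the same element
```

Paths like this are brittle, since they break when the page layout shifts; an attribute-based query is usually sturdier.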

After a quick analysis, we see that in our page the data is contained in two elements, a div with title ‘buyer-name’ and a span with class ‘item-price’:

Knowing this, we can create the correct XPath query and use the lxml xpath function like this:
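A sketch of those two queries, run against hypothetical markup shaped like the elements just described (the buyer names and prices are made up). Note that @class="item-price" only matches when the class attribute is exactly that string; an element carrying several classes would need a contains() test instead.

```python
from lxml import html

# Hypothetical markup in the same shape as the page described above.
PAGE = b"""<html><body>
<div title="buyer-name">Alice Example</div>
<span class="item-price">$10.00</span>
<div title="buyer-name">Bob Example</div>
<span class="item-price">$7.50</span>
</body></html>"""

tree = html.fromstring(PAGE)
# Text of every <div> whose title attribute is exactly "buyer-name".
buyers = tree.xpath('//div[@title="buyer-name"]/text()')
# Text of every <span> whose class attribute is exactly "item-price".
prices = tree.xpath('//span[@class="item-price"]/text()')
```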

Let’s see what we got exactly:
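A sketch of inspecting the results, using the same hypothetical markup. Printing shows two parallel lists; zipping them pairs each buyer with a price, which assumes every buyer has exactly one matching price in the same order.

```python
from lxml import html

# Hypothetical markup in the same shape as the page described above.
PAGE = b"""<html><body>
<div title="buyer-name">Alice Example</div>
<span class="item-price">$10.00</span>
<div title="buyer-name">Bob Example</div>
<span class="item-price">$7.50</span>
</body></html>"""

tree = html.fromstring(PAGE)
buyers = tree.xpath('//div[@title="buyer-name"]/text()')
prices = tree.xpath('//span[@class="item-price"]/text()')

print("Buyers:", buyers)
print("Prices:", prices)

# Pair each buyer with a price (assumes the two lists line up one-to-one).
records = list(zip(buyers, prices))
print(records)
```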


Congratulations! We have successfully scraped all the data we wanted from a web page using lxml and Requests. We have it stored in memory as two lists. Now we can do all sorts of cool stuff with it: we can analyze it using Python or we can save it to a file and share it with the world.
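One way to save the two lists, sketched with Python's csv module. The lists hold hypothetical values in the shape of the scraped data, save_pairs is a hypothetical helper, and the file goes to a temporary directory.

```python
import csv
import tempfile
from pathlib import Path

# Hypothetical scraped results, in the shape of the two lists above.
buyers = ["Alice Example", "Bob Example"]
prices = ["$10.00", "$7.50"]


def save_pairs(path, buyers, prices):
    """Write one row per buyer/price pair, after a header row."""
    with open(path, "w", newline="", encoding="utf-8") as fh:
        writer = csv.writer(fh)
        writer.writerow(["buyer", "price"])
        writer.writerows(zip(buyers, prices))


out = Path(tempfile.mkdtemp()) / "purchases.csv"
save_pairs(out, buyers, prices)

# Read the file back to check its contents.
with open(out, newline="", encoding="utf-8") as fh:
    rows = list(csv.reader(fh))
print(rows)
```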


Some more cool ideas to think about are modifying this script to iterate through the rest of the pages of this example dataset, or rewriting this application to use threads for improved speed.
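A sketch combining both ideas, under stated assumptions: concurrent.futures.ThreadPoolExecutor downloads several pages in parallel and each page is parsed with the XPath queries shown earlier. The page list and the fake fetcher in the demo are hypothetical so the code runs offline; real URLs would follow the site's own pagination scheme, and you would drop the fetcher argument to use requests.

```python
from concurrent.futures import ThreadPoolExecutor

import requests
from lxml import html


def fetch(url):
    """Download one page and return its raw bytes."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    return response.content


def extract(content):
    """Pull (buyer, price) pairs out of one page's bytes."""
    tree = html.fromstring(content)
    buyers = tree.xpath('//div[@title="buyer-name"]/text()')
    prices = tree.xpath('//span[@class="item-price"]/text()')
    return list(zip(buyers, prices))


def scrape_all(urls, fetcher=fetch, max_workers=4):
    """Fetch pages on a thread pool and return all pairs in URL order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        pages = list(pool.map(fetcher, urls))  # map keeps the input order
    results = []
    for content in pages:
        results.extend(extract(content))
    return results


# Hypothetical pages keyed by made-up names, served by a fake fetcher.
FAKE_PAGES = {
    "page-1": b'<html><body><div title="buyer-name">Alice Example</div>'
              b'<span class="item-price">$10.00</span></body></html>',
    "page-2": b'<html><body><div title="buyer-name">Bob Example</div>'
              b'<span class="item-price">$7.50</span></body></html>',
}
pairs = scrape_all(list(FAKE_PAGES), fetcher=FAKE_PAGES.__getitem__)
print(pairs)
```

Threads suit this job because the time goes into waiting on the network rather than computation; keep max_workers modest to avoid overloading the site.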